Open Commons First Social Venture Project:

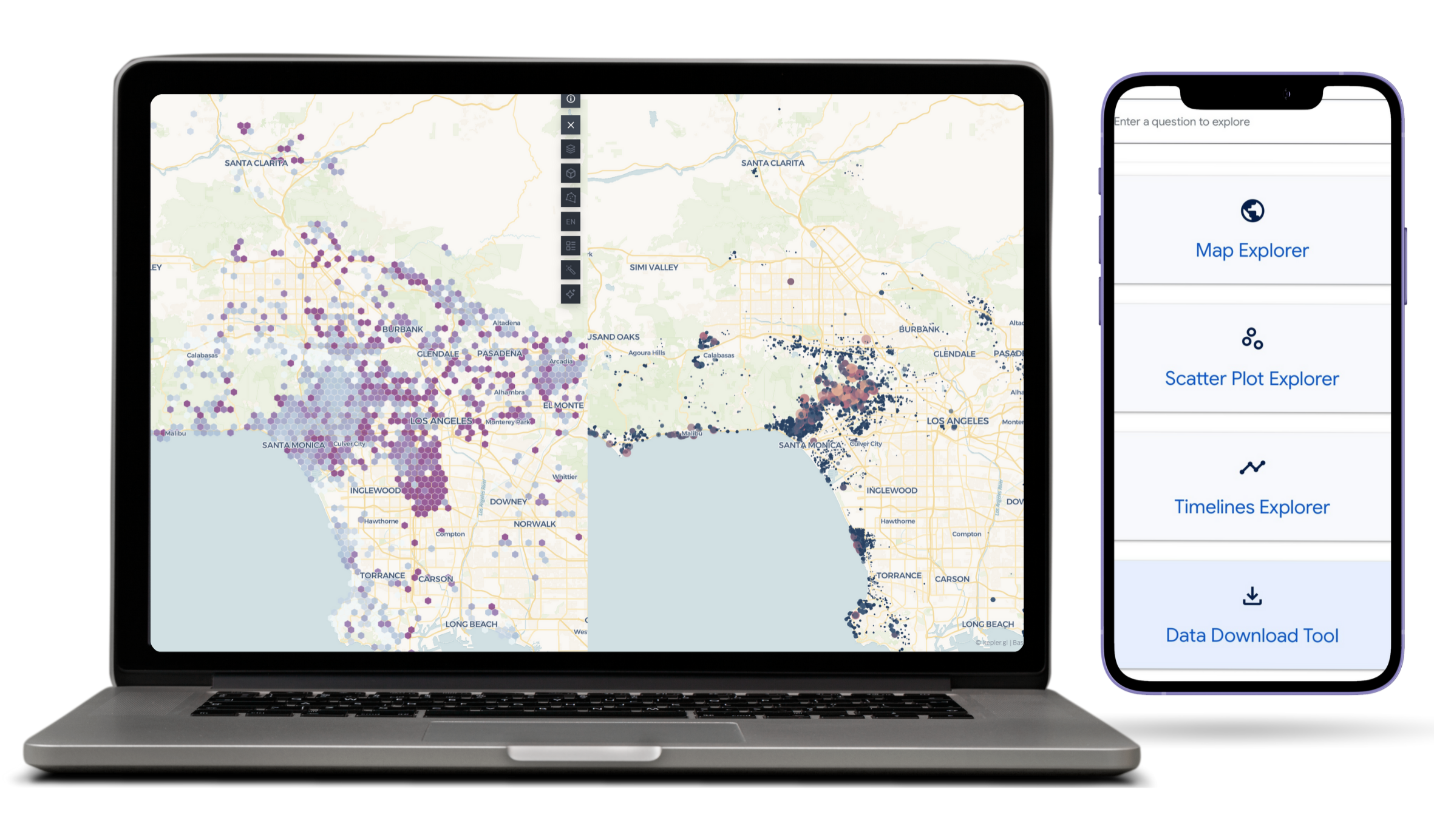

Open.co: A #CivicTech Data Exploration Platform Focused On Hyper-Local Data

Launching

Winter 2026

We are launching Open.co for a simple purpose: there is more civic data than what lives at the federal level, and we want to bring hyper-local data to the people.

We also love maps, geospatial analysis, and network & complexity sciences. So we're going to build a web app platform & data vending service that brings city, county, state, & federal data to life in new ways.

Every city holds a quiet archive of the built world. Parcel maps, permit filings, utility layers, zoning overlays, ownership chains, tax rolls. Environmental reports, policy disclosures, budget spreadsheets, ESG signals. Most of it is public. Some of it is open. But nearly all of it lives in isolation — disconnected by format, language, scale, and system.

Those within the Open Commons — researchers, investors, planners, advocates — are left to reconstruct meaning manually, file by file, source by source. What we need now isn’t more data — it’s the connective infrastructure to help those in the Open Commons trace relationships across layers of the built world.

Here is Our Roadmap & Data Progress for an Open Civic Data GIS Mapping Platform.

Objectives:

1) Audit 22 U.S. cities for open data hosted on Socrata, ArcGIS, and/or CKAN platforms

2) Methodology for aggregating data sources (city, county, state)

3) Methodology for original data aggregation

Activities:

- Develop coding scripts & scrapers for aggregating data sources

- Develop LLMs for classification, alongside manual EDA and cleaning

- Scale data audit beyond direct open data portals

Deliverables:

- Develop coding scripts & scrapers for aggregating data sources

- Develop LLMs for classification, alongside manual EDA and cleaning
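To make the audit scripts above a little more concrete, here is a minimal Python sketch of how a per-portal dataset count might be collected. Everything in it is an assumption for illustration, not our finished tooling: the helper names `catalog_url` and `dataset_count` are hypothetical, and the endpoint patterns (CKAN's `package_search` action, Socrata's Discovery API, and a DCAT-style `data.json` feed on ArcGIS Hub sites) should be verified against each city's portal before relying on them.

```python
from urllib.parse import urlencode


def catalog_url(platform: str, domain: str) -> str:
    """Build a catalog URL for one portal (endpoint patterns are assumptions)."""
    if platform == "ckan":
        # CKAN Action API: rows=0 asks for the total count without any records.
        return f"https://{domain}/api/3/action/package_search?" + urlencode({"rows": 0})
    if platform == "socrata":
        # Socrata Discovery API, filtered to a single portal domain.
        query = urlencode({"domains": domain, "limit": 1})
        return "https://api.us.socrata.com/api/catalog/v1?" + query
    if platform == "arcgis":
        # Assumed DCAT-style feed; not every ArcGIS Hub site exposes this path.
        return f"https://{domain}/data.json"
    raise ValueError(f"unknown platform: {platform}")


def dataset_count(platform: str, payload: dict) -> int:
    """Read the number of datasets out of an already-decoded JSON response."""
    if platform == "ckan":
        return int(payload["result"]["count"])
    if platform == "socrata":
        return int(payload["resultSetSize"])
    if platform == "arcgis":
        return len(payload.get("dataset", []))
    raise ValueError(f"unknown platform: {platform}")
```

In practice, each URL would be fetched (for example with `urllib.request.urlopen`), decoded with `json.load`, and passed to `dataset_count`, giving one row per city for the audit table. Keeping URL building and response parsing separate from the network call makes the parsing easy to test offline.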

Objectives & Deliverables:

1) Develop Deepscatter visualization & technical report of U.S. City Data Audit

2) Develop a private fork of Google’s Datacommons.org platform for hosting & analyzing data as a Minimum Viable Product

3) Begin exploring vis.gl, deck.gl, and custom GLSL as the foundation for a custom GIS mapping platform

4) Use tools like Openmappr.org, Nomic Deepscatter, and Cosmograph.app to explore UI options for a data search engine built around relational & networked data visualization.

Objectives & Deliverables:

1) Begin development in luma.gl, deck.gl, and other vis.gl/WebGL libraries to find custom solutions for visualizing massive datasets in network & relational formats

2) Move data hosting & visualization away from Google’s Datacommons.org platform to a custom React/deck.gl-driven web application.